The AI Revolution is Upon Us—And UC San Diego Researchers Are Using it to Inform Climate Action

by Sara Bock - sbock@ucsd.edu

A rapid acceleration in the development and accessibility of artificial intelligence tools has taken the world by storm in recent months, spurring many experts to conclude that the AI revolution has arrived—and leaving us all wondering what the implications are for the future.

Generative AI models like ChatGPT are dominating much of the current discussion, and perhaps rightfully so: In a world where machines are capable of thinking like humans, where do we draw the line between reality and illusion? But with the availability of massive datasets and advances in high-performance computing that enabled this disruption of the status quo also comes an opportunity to leverage the power of AI, in its various forms, to make a positive impact on society.

That’s exactly what’s happening at UC San Diego, where researchers across a range of fields are working together to develop and implement AI-assisted tools and machine learning methods that will enable scientific discoveries at an unprecedented pace. When it comes to significant global challenges like climate change, time is of the essence: From extreme weather and marine heat waves to wildfires and catastrophic flooding, the effects of a warming planet become more pronounced each year. With the culture of collaboration that underlies UC San Diego’s $1.64 billion research enterprise, and a long history of leading the way in both AI and climate research, there’s no better place to uncover unique and innovative solutions.

“At UC San Diego, we are proud to have been at the forefront of AI research for over four decades, and we continue to believe that this technology can play a critical role in addressing global challenges across a variety of disciplines,” said Tara Javidi, a Jacobs Family Scholar and professor in the Department of Electrical and Computer Engineering and Halıcıoğlu Data Science Institute.

“One of our major initiatives in this area is the Eric and Wendy Schmidt AI in Science Postdoctoral Fellowship program, which is training a new generation of STEM postdoc scholars who are integrating AI with science and engineering research. From studying coral reef adaptation, to carbon capture, to enhancing lithium-ion battery technologies, our postdocs are putting AI in use to address many facets of climate change,” said Javidi, who leads the Schmidt AI in Science Postdoctoral Fellowship program. “With a cadre of world-renowned researchers committed to public good, UC San Diego is uniquely positioned to leverage rapidly developing tools in AI to address pressing issues facing humanity.”

The following are just a few of the many research teams across UC San Diego that are using AI in interdisciplinary work that aims to improve our understanding of the threats facing our planet—and what we can do to better protect it:

Photo by Brian Zgliczynski

Understanding climate impacts on coral reefs

Coral reefs, which sustain a significant percentage of the Earth’s biodiversity, have commonly been referred to as the first ecosystem-wide casualty of climate change. But in the Sandin Lab at UC San Diego’s Scripps Institution of Oceanography, a team of researchers, with the assistance of artificial intelligence tools, is finding out that it’s a bit more complicated than that.

For nearly a decade, marine ecologist Stuart Sandin has led the charge on monitoring individual corals around the world through his 100 Island Challenge project. At Palmyra Atoll, one of their key survey sites in the Pacific, he and his team used time-series images to assess the effects of a 2016 marine heat wave that spurred a significant bleaching event across the reef. As the planet warms, it’s a phenomenon scientists say will become more frequent, with increases in ocean temperatures causing corals to become stressed to the point that they expel the algae in their tissues and turn completely white.

Over the years that followed, however, the researchers witnessed those same corals undergo significant recovery and growth. So what is it about certain corals that enables them to survive, and even grow, following marine heat waves and other climate-induced stressors?

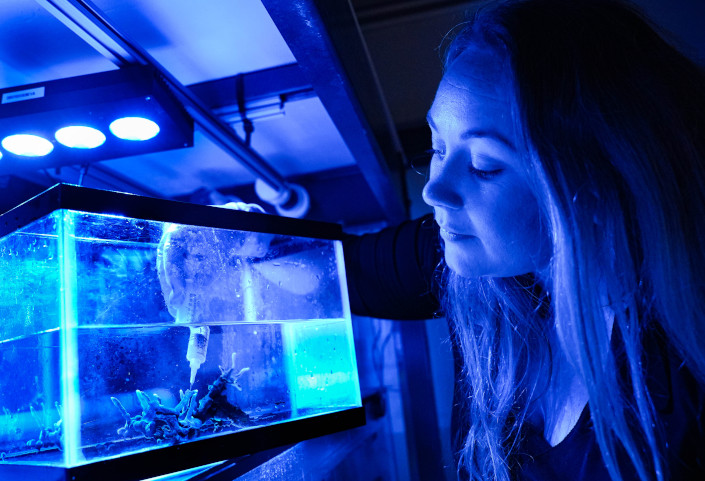

In the Sandin Lab at Scripps Institution of Oceanography, postdoctoral researcher Beverly French takes a tissue sample from a coral for metagenomic sampling. (Photo by Sapna Parikh/UC San Diego)

That’s a question that researchers in Sandin’s lab are working to answer. One of these researchers is Beverly French, a member of the inaugural cohort of fellows selected for the Eric and Wendy Schmidt AI in Science Postdoctoral Fellowship. Her work is funded as part of a $148 million initiative to support postdoctoral researchers who are applying AI tools in scientific research at nine top universities worldwide.

French has long been intrigued by the fact that coral reefs have existed for hundreds of millions of years, surviving significant environmental disruptions and mass extinction events.

“I think there are things that we can really learn from corals about how organisms might be able to persist in an environment that is increasingly changing and experiencing more severe climate disturbances,” French said. Building on the work of the 100 Island Challenge, she’s incorporating metagenomic sampling, which involves extracting DNA from corals, to further the scientific community’s understanding of what makes the entire coral holobiont—coral, its symbiotic algae and microbiome—survive and adapt.

Key to this effort is layering her findings with other relevant data: namely, from the large-area imagery collection and processing that researchers in Sandin’s lab have been conducting for years as they track changes in reefs over time. Their work initially involved manually tracing corals from underwater images collected during site surveys and interpreting those images to identify coral species. But with nearly a million corals across 100 islands, that’s a massive amount of data to process. Now, this time-consuming work is performed with the help of AI-assisted tools that can extract these data from imagery, accelerating the researchers’ workflows and, effectively, the process by which scientific discoveries are made.

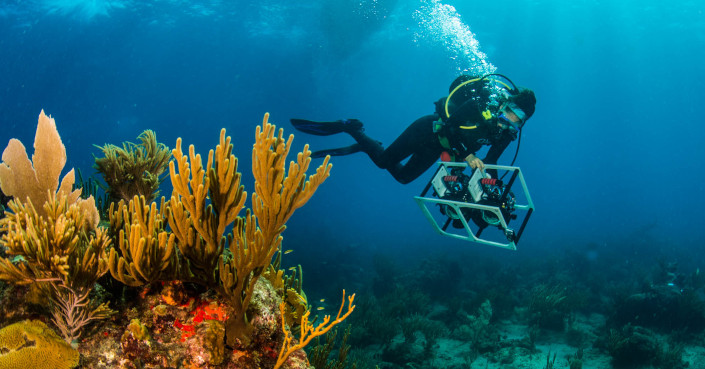

Nicole Pedersen, a staff researcher in the Sandin Lab, collects images of a coral reef in the Caribbean. These images are used to create mosaic plots, which can be turned into 3D models that are analyzed with the help of an AI-assisted tool. (Photo by Ralph Pace)

One of these tools is TagLab, an AI-powered segmentation software that provides annotation and analysis of orthomosaics—detailed maps made up of many smaller images stitched together. The software was developed by the Visual Computing Lab at the Institute of Information Science and Technologies, part of the National Research Council of Italy, in collaboration with Sandin and team members Nicole Pedersen and Clinton Edwards. By matching corals through time using images collected by Scripps researchers during dives at single sites over a period of years, the tool significantly accelerates the process by which the researchers can observe how those corals are growing, shrinking, changing, dying and recruiting.

Through a cross-campus collaboration at UC San Diego, Sandin and his team worked alongside colleagues in the Jacobs School of Engineering to develop a tailor-made machine learning tool that addresses their specific needs. Structural engineer Falko Kuester and researcher Vid Petrovic created Viscore, a custom visualization and image analysis software with pipelines for ecological data extraction. It gives researchers in the Sandin Lab the capability to align the time-series images that inform their work, generate 3D models of reefs from thousands of underwater photos, and take “virtual dives” to extract additional data that they would otherwise be unable to collect during a real dive due to bottom time limitations. The team also uses CoralNet, created more than a decade ago by computer scientist David Kriegman, also in the Jacobs School of Engineering, which uses AI image recognition to identify coral species using small-scale images of the bottom of the coral reef.

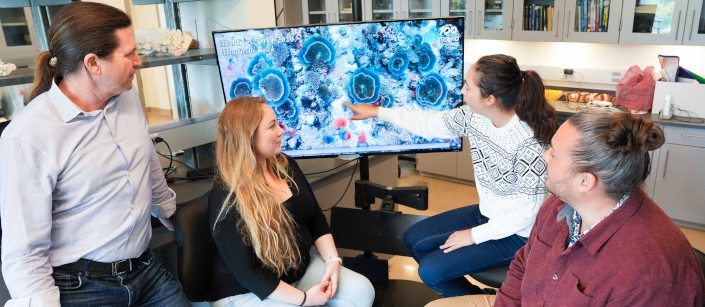

From the left, Stuart Sandin, Beverly French, Nicole Pedersen and Nathaniel Hanna Holloway discuss their observations of coral growth using time-series imagery. (Photo by Sapna Parikh/UC San Diego)

For Sandin, who holds the Oliver Chair in Marine Biodiversity and Conservation at Scripps, it’s important that these tools are viewed in the context of what he refers to as a “human-in-the-loop” approach—using AI to take on the easier, more tedious tasks, while ensuring a scientist’s expertise remains central. He compares it to writing an essay using a generative AI tool like ChatGPT: You might rely on it to write the beginning, but when you get to a deeply analytic part, creativity comes into play and you edit to improve it with your human experience.

While they’ve found that some coral reefs may be more resilient than previously thought, Sandin and French say that just last year, they observed tremendous coral loss on the south side of Palmyra following a large swell event—something scientists say will become increasingly prevalent in coming years. It’s given them a renewed sense of urgency to better understand the effect our changing climate has on reefs, and what we as a society can do about it.

“Now is the part where humans can get involved—where we can take agency to not just let this ecosystem die away but instead to use the tools of ingenuity to make sure that we preserve this bastion of biodiversity,” said Sandin.

Photo by iStock_metamorworks

Accelerating decision-making for climate scientists

Why is Texas experiencing extreme heat waves? And why does El Niño cause temperatures to rise across North America?

While climate studies often explore the short-range correlations that can help answer perplexing questions like these, there’s still a lot we don’t know about cause and effect on a global scale. UC San Diego computer scientist Rose Yu hopes to help change that, and believes the solutions can be found in the vast amounts of data collected by climate scientists, the volume of which has grown exponentially in recent years.

But even with the rapidly growing capabilities of advanced computing, there are so many large and complex datasets available and so many sources from which these data are derived—simulations, predictions, measurements from sensors, satellites, weather balloons and more—that finding those solutions is easier said than done. That’s part of the reason why the global climate models currently being used to predict future temperatures and weather patterns over time are extremely expensive and can take months to run.

Computer scientist Rose Yu speaks to colleagues and attendees at the UC San Diego Scientific Machine Learning Symposium in March 2023. (Photo courtesy of Rose Yu)

“We want to have the results within a week, so that we can really accelerate decision-making for climate scientists,” said Yu, who is an assistant professor in the Department of Computer Science and Engineering at the Jacobs School of Engineering and the Halıcıoğlu Data Science Institute.

Ambitious? Yes. But that’s where artificial intelligence comes in. Thanks to a $3.6 million grant awarded in 2021 by the Department of Energy, Yu and two UC San Diego colleagues, Yian Ma and Lawrence Saul, have teamed up with researchers at Columbia University and UC Irvine to develop new machine learning methods that can speed up these climate models, better predict the future, and improve our understanding of climate extremes.

This work comes at a crucial time, as it becomes increasingly important that we develop an accurate understanding of how climate change is impacting our Earth, our communities and our daily lives—and how to use that newfound knowledge to inform climate action. To date, the team has published more than 20 papers in both machine learning and climate science-related journals as they continue to push the boundaries of science and engineering on this highly consequential front.

To increase the accuracy of predictions—and quantify their inherent uncertainty—the team is working on customizing algorithms to embed physical laws and first principles into deep learning models, a form of machine learning that essentially imitates the function of the human brain. It’s no small task, but it’s given them the opportunity to collaborate closely with climate scientists who are putting these machine learning methods into practical algorithms in climate modeling.

“Because of this grant, we have established new connections and new collaborations to expand the impact of AI methods to climate science,” said Yu. “We started working on algorithms and models with the application of climate in mind, and now we can really work closely with climate scientists to validate our models.”

From predicting extreme weather to enabling disaster response, Yu hopes to see these improved models make an impact on the work of other scientists across UC San Diego, where she believes interdisciplinary collaborations are the key to accelerating scientific advances. She served as one of the organizers of the Scientific Machine Learning Symposium held at UC San Diego in March, which brought together researchers and practitioners at the intersection of AI and science to discuss opportunities to use AI in their work.

“There’s a very broad interest in AI for science across campus,” said Yu, who is serving as a mentor for the Eric and Wendy Schmidt AI in Science Postdoctoral Fellowship. “It’s very important for us as engineers to understand what problems are important to the scientific field so that we’re developing tools that are actually useful for them. During the process, we also learn new challenges that can drive AI methods development. And from the domain science side, they get to learn about the recent developments in algorithms and AI, and that triggers new thoughts of potential.”

Photo by iStock_Toa55

Informing disaster response and mitigation

Fighting wildfires using artificial intelligence: It might sound futuristic, but it’s already underway across California, thanks in large part to the WIFIRE Lab at the San Diego Supercomputer Center.

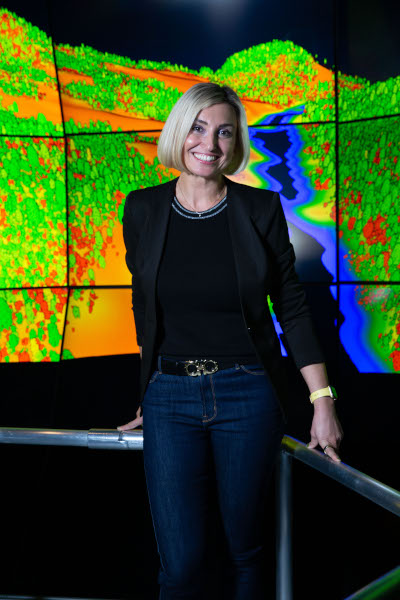

When the center’s Chief Data Science Officer and WIFIRE founding director, Ilkay Altintas, first conceived the idea to develop machine learning algorithms that pull together real-time data to produce “firemaps”—predictive models of a fire’s behavior—she never imagined the success that lay ahead. But as weather extremes become the norm and cities expand toward the wildlands, staying one step ahead of a potentially catastrophic wildfire is more critical than ever—and people’s lives depend on it.

Chief Data Science Officer Ilkay Altintas (Photo courtesy of Ilkay Altintas)

“When we first started doing this, we thought this was going to stay a research exercise and we were maybe going to raise eyebrows. I didn’t imagine, as a scientist, that it would go this far,” said Altintas. “I think both the crisis at hand and also the need for these tools pushed it to a place that we are now super proud of.”

That crisis, of course, is climate change, and with hot, dry conditions projected to intensify over time, Altintas says wildfires are expected to get worse. Since different weather extremes give way to different types of vegetation—the “fuel” that allows these fires to spread—it’s even more vital that stakeholders are equipped with the tools they need to not only respond to fire emergencies, but also to better prevent and predict them.

The WIFIRE Lab’s work in dynamic data-driven fire modeling has enabled fire chiefs and incident commanders to make crucial decisions related to mitigation and containment, and it’s all made possible through the growing capabilities of AI. By pulling together massive amounts of data from sources like aircraft sensors, camera feeds, satellite imagery, topographic maps and weather models to produce these predictive tools, Altintas and her team are making a real impact. The lab’s Firemap platform has become a key element of the state-funded Fire Integration Real-Time Intelligence System (FIRIS), a public-private partnership led by the California Governor’s Office of Emergency Services, that provides real-time intelligence data and analysis on emerging disaster incidents in California.

But while extinguishing wildfires is necessary to protect human life and ecosystems, decades of suppression have given way to a dangerous buildup of vegetation that’s just waiting to power the next megafire. In an effort to extend their cyberinfrastructure, WIFIRE Commons, to enable proactive solutions rather than solely reactive ones, Altintas and her team, in collaboration with many partners including the Los Alamos National Laboratory and the U.S. Forest Service, developed BurnPro3D, a prescribed fire planning tool that combines next-generation fire science with 3D vegetation data and AI. This vital resource gives fire managers the ability to optimize when and where they should conduct prescribed burns, eliminating that excess vegetation in a low-intensity, controlled way.

A firefighter supervises a controlled burn at Marine Corps Base Camp Pendleton, California. (Photo by Sgt. Jake McClung, U.S. Marine Corps)

Researchers at the WIFIRE Lab are now expanding their work beyond wildfires and evolving their approach toward a multi-hazards knowledge platform that will inform officials on other types of natural disasters. This past winter, using data acquired by the FIRIS aircraft, and in collaboration with funding agencies NASA and NOAA, the WIFIRE team assessed post-fire debris flow risk and damage from extreme flooding and snowfall events across California. They’re also working to enable decision support for wildfire response as far as three to five days in advance.

Ilkay Altintas and the WIFIRE team at the San Diego Supercomputer Center. (Photo courtesy of WIFIRE Lab)

Altintas attributes the success of this work in large part to a growing trend called convergence research, built on the idea that society’s largest challenges require collaboration across numerous disciplines and sectors. BurnPro3D, in fact, was made possible by $6 million in funding from the National Science Foundation’s Convergence Accelerator.

“We work with stakeholders from many organizations, bringing together AI experts and fire science experts, along with stakeholders, to understand the gaps—and then we develop solutions around those gaps,” said Altintas.

Also funded by the NSF Convergence Accelerator is the newly launched Convergence Research (CORE) Institute at the San Diego Supercomputer Center, a fellowship that aims to empower the next generation of researchers and scientists to take on complex societal problems through convergence research. This year’s theme is “Tackling Climate-Induced Challenges with AI.” Following a virtual boot camp this spring, 40 early career researchers from around the world will attend an incubator at UC San Diego this summer to further develop their AI strategies for climate-induced challenges.

Altintas believes that this approach is the best way to effect real change.

“Our problems are big and complex and we really need to tackle them together,” she said.

Photo by Sapna Parikh/UC San Diego

Improving prediction of atmospheric rivers

Amid a historic drought, a deluge of rain and relentless storms battered California this past winter, leading to severe flooding across the state and record snowpack in the Sierra Nevada. It’s a prime example of “weather whiplash,” which scientists say will become a more frequent occurrence as our climate continues to warm.

The weather phenomenon behind the bulk of this precipitation? Atmospheric rivers: the long, flowing regions in the sky that carry enormous amounts of water vapor, released over land in the form of rain and snow.

With the potential for high volumes of water to make landfall, preparedness is key. That’s why, in the Center for Western Weather and Water Extremes (CW3E) at UC San Diego’s Scripps Institution of Oceanography, atmospheric scientists like Luca Delle Monache, the center’s deputy director, are using artificial intelligence—in the form of machine learning algorithms—to improve the prediction of atmospheric rivers. The future we’ve been warned about, he says, is already here.

Atmospheric scientist and CW3E Deputy Director Luca Delle Monache. (Photo by Sapna Parikh/UC San Diego)

“We are going to get more intense atmospheric rivers: the more impactful ones, the ones that create a lot of damage,” said Delle Monache. “We’re living in it now—these very intense storms, this record-breaking snow accumulation—may be caused by climate change.”

When the atmosphere is warmer, it can hold more water vapor, which Delle Monache refers to as the “fuel” that powers an atmospheric river. And the more water vapor in the atmosphere, the more intense these storms can be. It’s vital, then, that researchers at CW3E—a global leader in the study and forecasting of atmospheric rivers—are able to accurately predict Integrated Water Vapor Transport, or IVT, which is the key signature variable for determining the presence and intensity of these storms.
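For readers curious about how IVT is calculated, the sketch below follows the standard textbook definition: specific humidity multiplied by each horizontal wind component, integrated over pressure through the depth of the atmosphere, divided by gravitational acceleration, and combined into a single magnitude. The function names and the sample column of values are illustrative assumptions, not CW3E code or data.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def integrate_trapezoid(values, pressures):
    """Trapezoidal integral of `values` over pressure levels given in pascals."""
    total = 0.0
    for i in range(len(pressures) - 1):
        dp = abs(pressures[i + 1] - pressures[i])
        total += 0.5 * (values[i] + values[i + 1]) * dp
    return total


def ivt(q, u, v, pressures):
    """IVT magnitude (kg per meter per second) for one vertical column.

    q: specific humidity (kg/kg), u and v: eastward and northward wind (m/s),
    pressures: pressure levels (Pa), all listed from the surface upward.
    """
    qu = [qi * ui for qi, ui in zip(q, u)]
    qv = [qi * vi for qi, vi in zip(q, v)]
    flux_east = integrate_trapezoid(qu, pressures) / G
    flux_north = integrate_trapezoid(qv, pressures) / G
    return math.hypot(flux_east, flux_north)


# Hypothetical column from 1000 hPa up to 300 hPa (made-up numbers).
pressures = [100000.0, 85000.0, 70000.0, 50000.0, 30000.0]
q = [0.010, 0.008, 0.005, 0.002, 0.0005]
u = [10.0, 15.0, 20.0, 25.0, 30.0]
v = [5.0, 5.0, 5.0, 5.0, 5.0]
print(round(ivt(q, u, v, pressures), 1))
```

Because moisture and wind are multiplied before integrating, a column that is both humid and windy at low levels produces a much larger IVT than one that is only humid or only windy.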

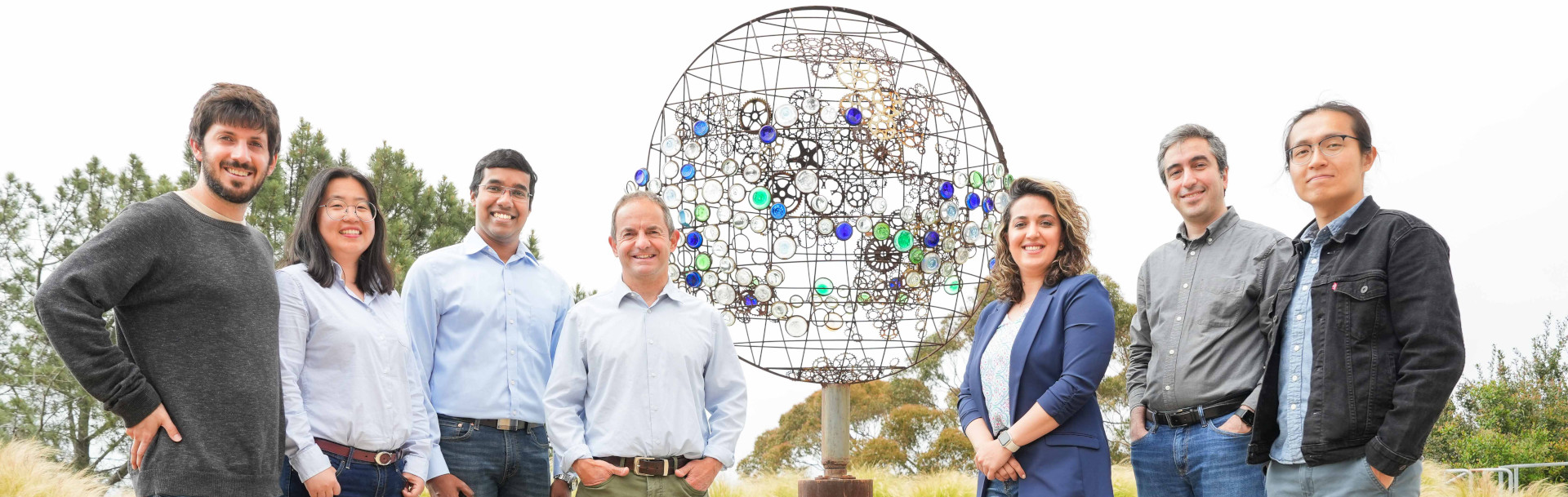

At CW3E, Delle Monache leads the Machine Learning Team, made up of atmospheric scientists and computer scientists who are improving the prediction of IVT by leveraging the power of massive amounts of weather data from the physics-based dynamical models used in forecasting. These models are imperfect, says Delle Monache, because the atmosphere is a chaotic system, meaning that even tiny errors in a forecast’s initial conditions can grow quickly and significantly alter predictability.
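The sensitivity to initial conditions described above can be illustrated with a toy example. The sketch below uses the logistic map, a classic chaotic equation chosen here purely as a stand-in; it is not a weather model and has no connection to CW3E's forecasting systems. Two runs start one billionth apart, and the gap between them is tracked over time.

```python
def logistic_trajectory(x0, steps, r=4.0):
    """Iterate the logistic map x -> r * x * (1 - x), starting from x0."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


# Two "forecasts" whose starting points differ by one part in a billion.
run_a = logistic_trajectory(0.2, 50)
run_b = logistic_trajectory(0.2 + 1e-9, 50)
gaps = [abs(a - b) for a, b in zip(run_a, run_b)]

# Print the gap at a few steps to show how quickly it grows.
for step in (0, 10, 20, 30, 40):
    print(step, gaps[step])
```

In this toy system the gap roughly doubles with each step on average, so an error far too small to measure at the start becomes as large as the signal itself within a few dozen iterations.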

By feeding the data from these models and observations into AI algorithms in what is called a “post-processing framework,” Delle Monache and his team are able to improve the predictions they make today based on the errors the model has made in the past. This work is enabled by an agreement with the San Diego Supercomputer Center at UC San Diego, where the CW3E team has exclusive access to its Comet supercomputer—capable of performing nearly 3 quadrillion operations per second.
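The basic idea of post-processing, learning from a model's past errors to adjust its current forecast, can be sketched in a few lines. The example below swaps in an ordinary least-squares line for the machine learning algorithms the team actually uses, and all names and numbers are hypothetical.

```python
def fit_linear_correction(past_forecasts, past_observations):
    """Fit observation ~ slope * forecast + intercept by least squares."""
    n = len(past_forecasts)
    mean_f = sum(past_forecasts) / n
    mean_o = sum(past_observations) / n
    cov = sum(
        (f - mean_f) * (o - mean_o)
        for f, o in zip(past_forecasts, past_observations)
    )
    var = sum((f - mean_f) ** 2 for f in past_forecasts)
    slope = cov / var
    intercept = mean_o - slope * mean_f
    return slope, intercept


def correct(forecast, slope, intercept):
    """Apply the learned correction to a new raw forecast."""
    return slope * forecast + intercept


# Made-up history: the raw model tends to run low relative to what was observed.
past_forecasts = [200.0, 300.0, 400.0, 500.0]
past_observations = [230.0, 340.0, 450.0, 560.0]

slope, intercept = fit_linear_correction(past_forecasts, past_observations)
print(correct(600.0, slope, intercept))
```

A real post-processing system would draw on many more predictors and far longer records, but the principle is the same: the correction is learned from pairs of past forecasts and the observations that followed.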

The CW3E Machine Learning Team. (Photo by Sapna Parikh/UC San Diego)

“The application of machine learning to the dynamical, physics-based model is a game changer,” said Delle Monache, adding that this work has improved the prediction of IVT by as much as 20%. “It’s an exciting time, where we’re really making meaningful improvements and contributions.”

These machine learning-fueled predictions are also informing CW3E’s main program, Forecast Informed Reservoir Operations (FIRO), which advises state water managers as to how much water should be released from reservoirs and when—thereby optimizing water supply and reducing flood risk. With better prediction of precipitation and inflow into the reservoir, researchers at CW3E found they can save approximately 25% more water each year, as evidenced by a pilot project at Lake Mendocino.

“If you can increase the water supply of each reservoir by 25% on average, that’s huge,” said Delle Monache. “We have basically devised a strategy to adapt to climate change. This is a very effective way to preserve water, a very important quantity for our society.”
